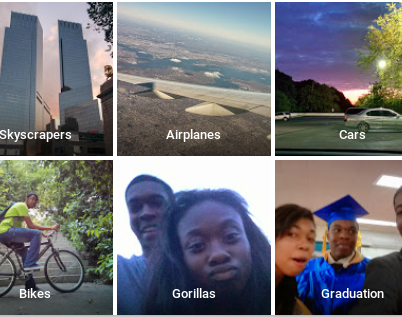

Artificial intelligence has sometimes fallen into glaring and embarrassing errors. In the industry, you only have to mention the ‘gorilla case’ and everyone will understand that you are referring to the 2015 incident, when the Google Photos AI model providing a description to the images indicated two black people as ‘gorillas’. One of the people involved, developer Jacky Alciné, reported it on Twitter and Google apologised profusely (the tweet has since been deleted, but there is a copy here).

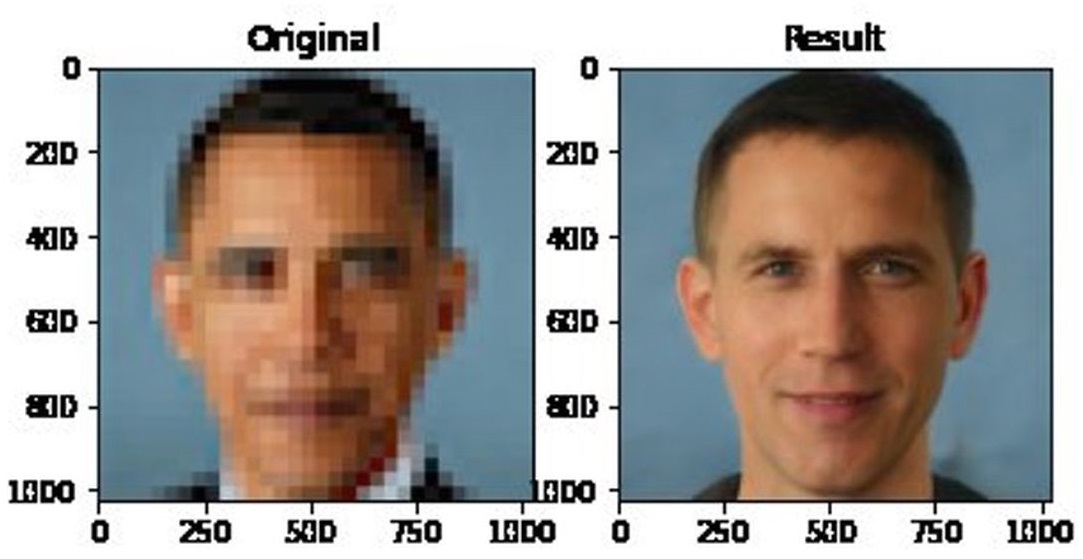

If you want to cite a more recent example, which happened in June 2020, try talking about the white Obama. In this case, NVIDIA’s StyleGAN algorithm (the same one used to create faces that don’t exist) was used to upscale some photos, i.e. increase the definition by “predicting” what information might be missing.

Unfortunately for the model, but especially for those of us who had to witness this embarrassing new debacle, the datasets used for its training probably did not contain enough faces of black people – certainly not enough of Barack Obama – to allow the neural network to improve its predictions and recreate the face we all expected.

Fast forward to today. These days there is a lot of talk about a new case, namely the bikini of US politician Alexandria Ocasio-Cortez (also known as AOC). A study on bias conducted by two researchers, Ryan Steed and Aylin Caliskan, highlighted the limitations of computer vision models that are based on unsupervised machine learning, i.e. without labelling of training images, a fairly recent development since training for these algorithms is usually supervised by humans.

Google’s SimCLR and OpenAI’s iGPT (the latter based on the GPT-2 natural language processing model) are the algorithms tested by the researchers, who demonstrated how an unsupervised model, when trained on a dataset containing even implicit biases, creates patterns that we might consider socially inappropriate.

The aim of the researchers was actually to create a technique for quantifying biased associations between representations of social concepts and image features. To do this, they trained the two algorithms on the ImageNet 2012 dataset (a large dataset containing 14 million images) and then decided to feed, among others, this photograph of AOC to iGPT.

In fact, the entire photograph was not provided: the researchers only submitted the upper part of the image, up to about the neck of the US congresswoman. From the neck down, it would be up to the AI model to finish drawing the image.

The same instructions were also carried out for other photographs of different people, men and women. However, while for images containing male faces, the model in most cases created bodies and clothes compatible with a business or work situation, thus suggesting an association between men and careers, the AI was not so respectful when it came to pictures of women.

In the majority of cases, photos containing female faces were completed with bikinis or short tops, and this despite the fact that the portion of the image provided to the algorithms contained nothing that would suggest a beach or an ‘informal’ situation. Even a serious and professional environment did not prevent the AI model from producing sexualised images of women (not only bikinis, but e.g. also very large breasts) in 52.5% of cases, compared to only 7.5% when it came to men (e.g. with shirtless or partially exposed chests).

Alexandria Ocasio-Cortez’s photo was also often supplemented by artificial intelligence with images of bodies in bikinis or skimpy clothes. The researchers even received criticism on Twitter for having published such images, albeit heavily pixelated due to the low resolution with which these operations were conducted. The protests convinced the authors of the research to remove all images of AOC in bikinis from the PDF altogether (although they are still available in early versions of the document on arXiv) and to use only artificially generated faces of people for their tests.

It is safe to assume, however, that without the fake bikini image of Ocasio-Cortez, this paper on bias would probably not have received the same media coverage. By now, many people working in the field have become accustomed to the idea that the datasets we find on the Internet carry racial or gender bias. Black people being mistaken for gorillas, white Obama and AOC in bikinis are just the tip of the iceberg of a much deeper problem.

The truth is that we all know very well that datasets built from stuff collected in bulk on the Internet, while on the one hand are useful because they give our models the chance to train on an immense amount of examples (and to compare against the usual benchmarks), on the other hand they insert an ethics ‘time bomb’ into our AI system.

It is also true that all datasets created by humans, even those made with the greatest possible care, carry with them some implicit bias. But sometimes this is a convenient excuse we tell ourselves when we go to get the usual public, free and huge dataset with which to train our new model.

For this reason we should rethink the construction of datasets, introducing a clean-by-design mindset that should allow those practitioners who build machine learning models to have fewer surprises possible, both on the ethical level and perhaps also on the side of adversarial attacks.

As a matter of fact, US political decision-makers have understood that to help the artificial intelligence field increase its quality output, it is necessary to create and supply free and clean datasets (or, at least, as clean as possible). The United States government, with the billions of dollars that will pour into the industry in the next few years, has thought among other things to finance the creation of quality datasets, that will be available free of charge to all researchers.

The uphill task will be to convince those who build AI models to use only clean datasets built according to determined criteria, discarding those that have already shown numerous ethical problems in the past. This will not be easy, but it will be essential in order to prevent artificial intelligence from spreading and amplifying the biases of our society.