In August, a large group of researchers at the Stanford Institute for Human-Centered Artificial Intelligence (HAI), an influential institute also known for its annual AI Index Report, published a study entitled *On the Opportunities and Risks of Foundation Models*. At the same time, they presented the new Center for Research on Foundation Models (CRFM), created specifically to study these models.

But what exactly are Foundation Models?

As the researchers immediately clarify, Foundation Models are essentially Large Language Models (LLMs), such as OpenAI’s GPT-3 and DALL-E or Google’s BERT. According to the Stanford authors, however, these models are so vast and so important that merely calling them LLMs is no longer enough. They are critical but, as the study’s presentation puts it, still incomplete.

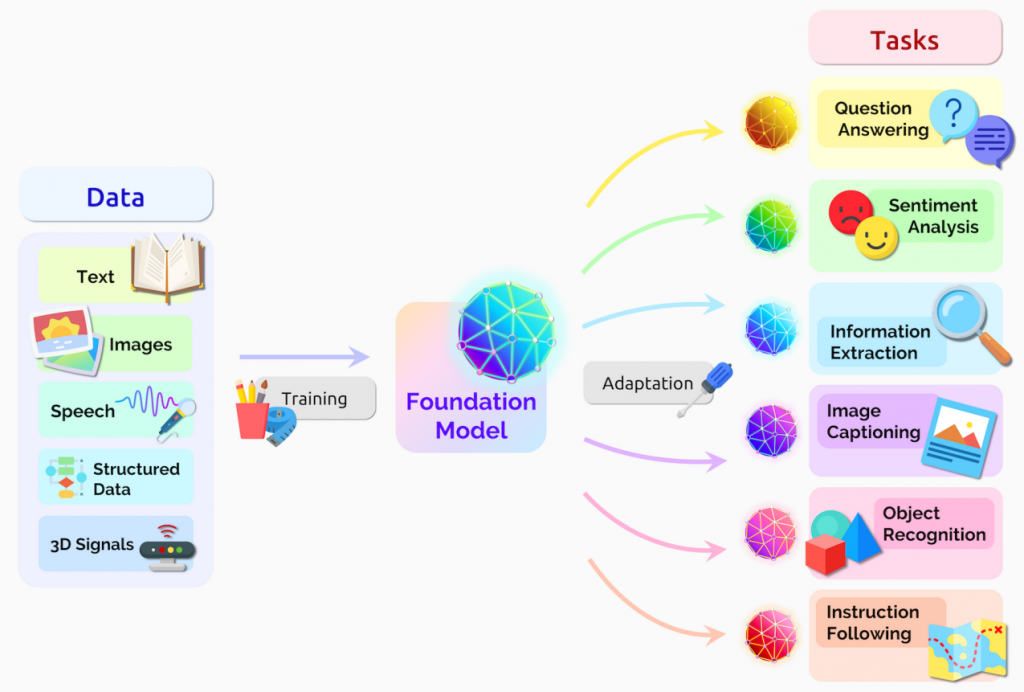

Another reason why the researchers have moved away from the Large Language Model label is that Foundation Models are not limited to language processing. They can extend to vision (as DALL-E does), audio, and more structured signals. Nor are they limited to simply reprocessing their input: these huge models can generate content, answer questions, analyse emotions, recognise objects and interact with humans in complex tasks. A multimodal architecture would allow them to integrate these disparate functions into a single environment, opening up new use cases and new ways of interacting with humans.

Finally, Foundation Models can give rise to emergent properties, i.e. behaviours that are ‘implicitly induced rather than explicitly constructed’. Just think of GPT-3’s ability to learn from context, adapting its output to users’ prompts, a property its developers did not foresee. They also encourage what the study calls homogenisation, which we could also describe as a convergence of techniques: third-party developers tend to build similar AI models and approaches inspired by the Foundation Model and apply them to a wide variety of tasks.

In short, to borrow the bold expression used by the Stanford researchers themselves, they represent a paradigm shift for artificial intelligence.

The report, which runs to more than 200 pages, takes an in-depth look at the opportunities and risks of Foundation Models: their capabilities (language, vision, robotics, reasoning, human interaction), their technical principles (architectures, training procedures, data, systems, security, evaluation, theory), their applications (health, education, law) and their social impact (bias, prejudice, misuse, economic impact, environmental impact, legal and ethical considerations).

Yet the Stanford initiative has attracted a lot of criticism. Meredith Whittaker, director of the AI Now Institute, described the move as “an attempt at erasure” of the term Large Language Model, given that in recent years LLMs have been at the centre of many of the ethical missteps attributed to artificial intelligence. “If someone Googles ‘foundation model,’ they’re not going to find the history that includes Timnit’s [Gebru, ed.] being fired for critiquing large language models. This rebrand does something very material in an SEO’d universe. It works to disconnect LLMs from previous criticisms,” Whittaker continued.

Equally caustic was Jitendra Malik, a professor at the University of California, Berkeley, and a leading expert in computer vision. During a workshop on Foundation Models (which I recommend watching on YouTube if you’re interested in the subject), he stated: “I think the term ‘foundation’ is horribly wrong. These models are really castles in the air. They have no foundation whatsoever.”

Judea Pearl, recipient of the 2011 Turing Award and one of the leading experts in causal inference, has also joined the controversy, asking pointedly on Twitter what exactly a Foundation Model is. According to Pearl, there is no fundamental principle that would justify calling these models ‘foundation’.

Many researchers, including some from adjacent disciplines, share a further concern, summed up by the linguist Emily Bender, who wrote, again on Twitter: “LLMs & associated overpromises suck the oxygen out of the room for all other kinds of research.”

And this is perhaps the greatest concern (one I share, incidentally): the title of ‘foundation’ is being granted to AI models that, first, have shown worrying limitations (not only bias but also an inability to perform very simple tasks such as arithmetic) and, second, certainly cannot represent all the research being carried out today across the many disciplines that make up artificial intelligence. Deep learning, on which Foundation Models are based, is certainly artificial intelligence, but not all artificial intelligence is deep learning. Moreover, even within deep learning, Foundation Models do not cover all of its research and applications: many successful systems, such as DeepMind’s, rely on specific, specialised architectures. Neither AlphaGo nor AlphaFold is a Foundation Model.

If I may venture a guess at some kind of hidden agenda, the motivations that pushed Stanford and HAI to adopt the term Foundation Models, let alone build an entire research centre around them, seem to be of a more opportunistic nature.

Let’s not forget that LLMs have been developed by industry players (Google with BERT, Microsoft and OpenAI with GPT-3 and DALL-E, AI21 Labs with Jurassic-1), largely because the resources needed to train them are prohibitive for anyone but large companies. As a result, academia has mostly watched from the sidelines as Big Tech raced ahead, reduced to studying these models largely from the outside.

Opening a centre specialised in LLMs, renaming them Foundation Models and compelling everyone else to play along looks to me like an attempt by Stanford to seize the LLM flag. They probably won’t be able to wave it alone, since the university, however prestigious, does not have the resources of a Google or a Microsoft. But this move might allow them, from now on, to claim a seat at the big boys’ table whenever LLMs are concerned.

And they might even succeed, provided that everyone else lets them.

The post Foundation Models: paradigm shift for AI or mere rebranding? appeared first on Artificial Intelligence news.